Rendering Experiments

A collection of interesting rendering experiments that are unfinished and/or never made it into any kind of game. I’m specifically interested in new ways of representing / rendering game worlds.

Most of these are written in Lobster, often with the help of (compute/pixel) shaders, and occasionally with some C++ helper code.

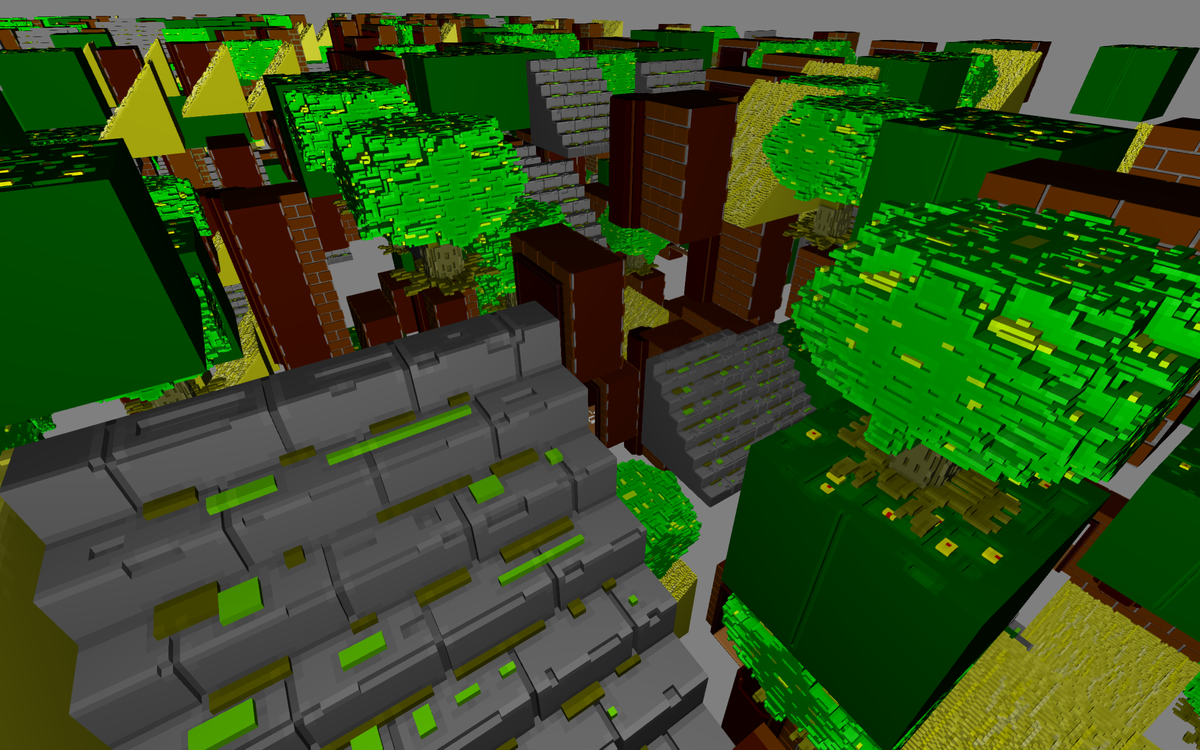

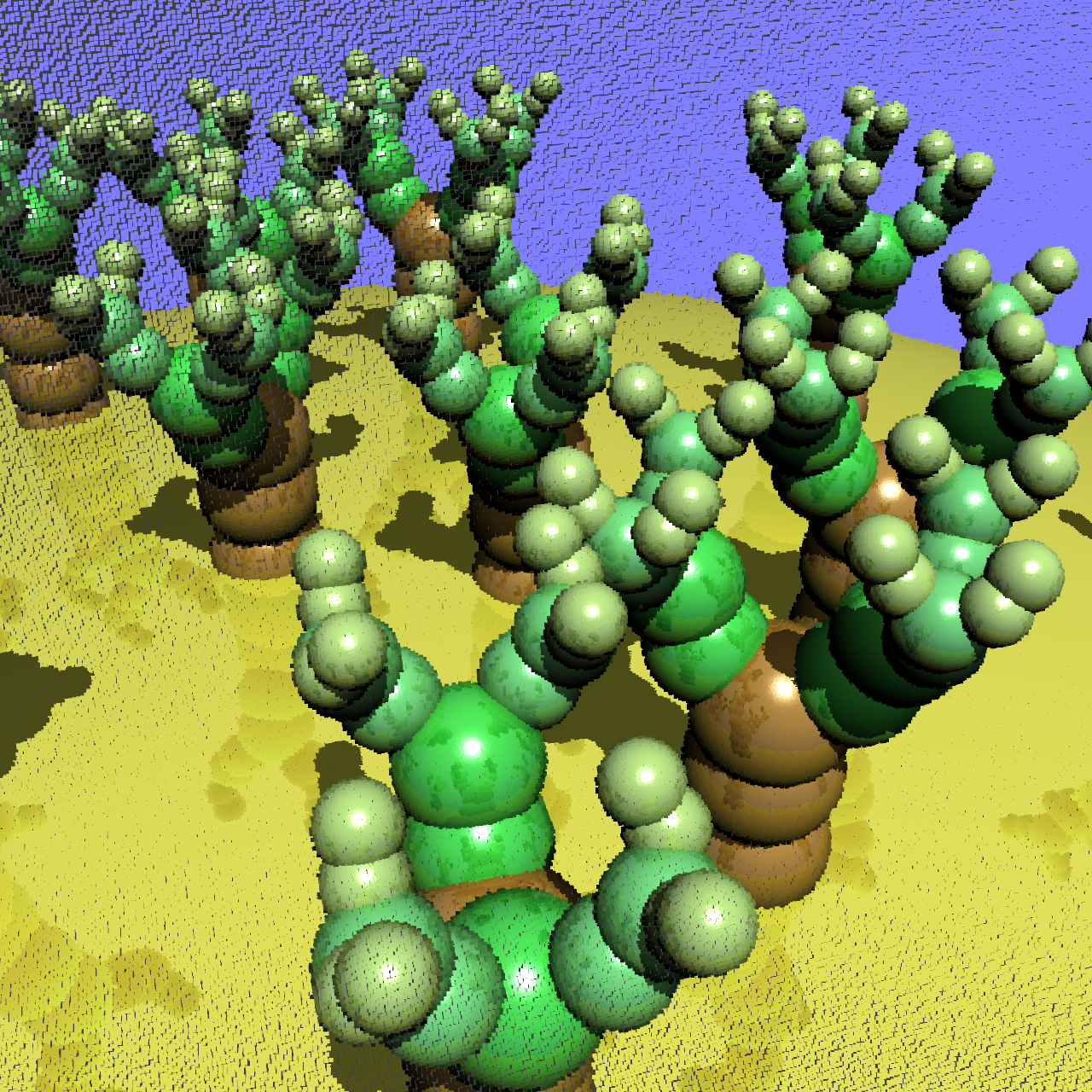

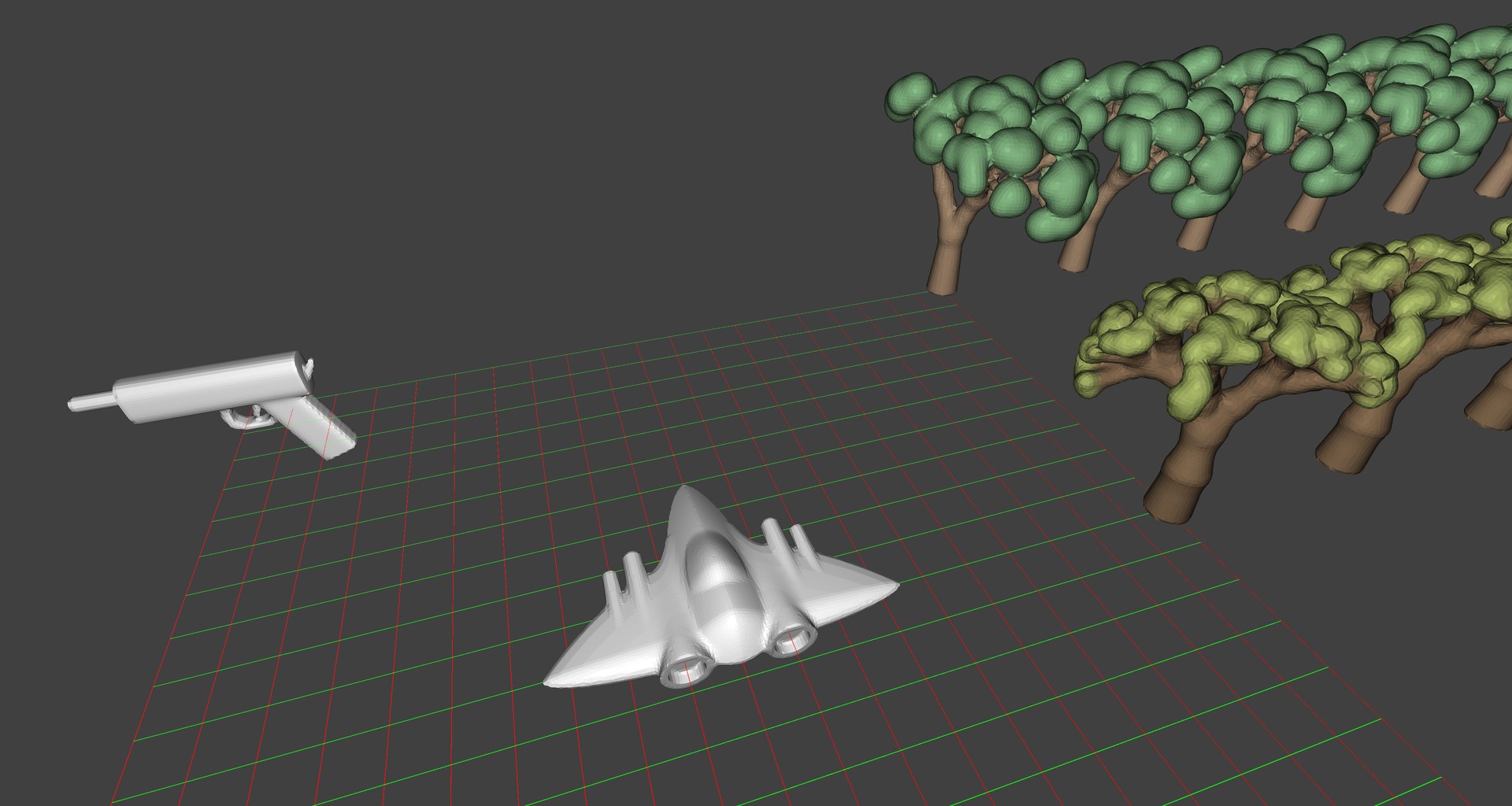

3D Texture ray-tracing (2017)

Simple 3D Bresenham tracing of 3D textures for cheap 3D voxel sprites. Together with mip-mapping, it’s easy to render hundreds of thousands of these for interesting scenes.
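A minimal sketch of the stepping idea, in Python rather than the Lobster/shader code the experiment used: 3D Bresenham walks integer voxel coordinates along a line, and a trace stops at the first solid voxel. Storing the sprite as a set of solid coordinates is an assumption for illustration (a shader would read a 3D texture instead), and mip-mapping is not shown.

```python
def bresenham3d(p0, p1):
    """Return the integer voxel coordinates on the 3D Bresenham line from p0 to p1, inclusive."""
    deltas = [abs(b - a) for a, b in zip(p0, p1)]
    steps = [1 if b > a else -1 for a, b in zip(p0, p1)]
    main = max(range(3), key=lambda i: deltas[i])  # driving axis: the largest delta
    n = deltas[main]
    pos = list(p0)
    err = [2 * d - n for d in deltas]  # error terms for the two minor axes
    points = [tuple(pos)]
    for _ in range(n):
        pos[main] += steps[main]
        for i in range(3):
            if i == main:
                continue
            if err[i] >= 0:
                pos[i] += steps[i]
                err[i] -= 2 * n
            err[i] += 2 * deltas[i]
        points.append(tuple(pos))
    return points


def trace(solid, start, end):
    """Return the first solid voxel hit walking from start to end, or None."""
    for voxel in bresenham3d(start, end):
        if voxel in solid:
            return voxel
    return None


sprite = {(2, 1, 0), (3, 2, 0)}  # hypothetical voxel sprite
print(trace(sprite, (0, 0, 0), (4, 2, 0)))  # (2, 1, 0)
```

Only integer additions and comparisons happen per step, which is what makes this kind of tracing cheap enough for very many sprites.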

Mirroring (2017)

Repeatedly mirrors blocks of pixels from a source image to create interesting visuals.
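The exact mirroring scheme isn’t described, so this Python sketch is only a guess at one possible version: within each square block, the top-left quadrant is mirrored onto the other three, and the pass repeats with halving block sizes. The image is a hypothetical list of rows of pixel values; `mirror_blocks` and `mirror_repeat` are illustrative names.

```python
def mirror_blocks(img, block):
    """Make every block x block tile symmetric by mirroring its top-left quadrant."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for by in range(0, h, block):
        for bx in range(0, w, block):
            for y in range(by, min(by + block, h)):
                for x in range(bx, min(bx + block, w)):
                    # Fold local coordinates back into the tile's top-left quadrant.
                    lx, ly = x - bx, y - by
                    sx = bx + min(lx, block - 1 - lx)
                    sy = by + min(ly, block - 1 - ly)
                    out[y][x] = img[sy][sx]
    return out


def mirror_repeat(img, block, levels):
    """Apply mirror_blocks repeatedly, halving the block size each time (minimum 2)."""
    for _ in range(levels):
        img = mirror_blocks(img, block)
        block = max(2, block // 2)
    return img


src = [[y * 4 + x for x in range(4)] for y in range(4)]
print(mirror_blocks(src, 4))  # [[0, 1, 1, 0], [4, 5, 5, 4], [4, 5, 5, 4], [0, 1, 1, 0]]
```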

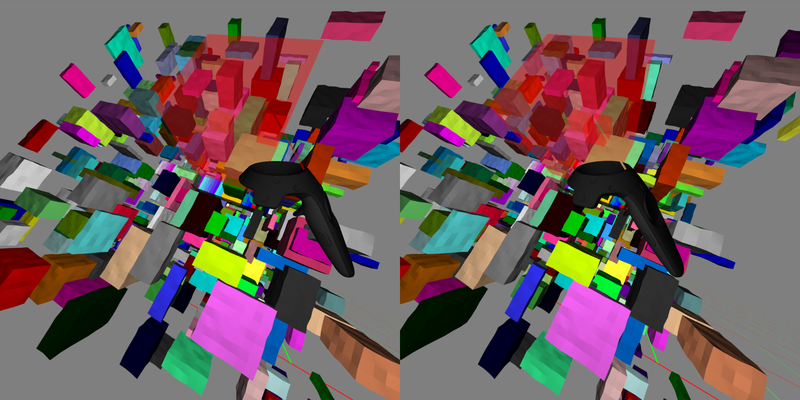

VR voxel sculpting (2016)

Quick VR “hello world” app to try out the Vive, and test Lobster’s VR integration :)

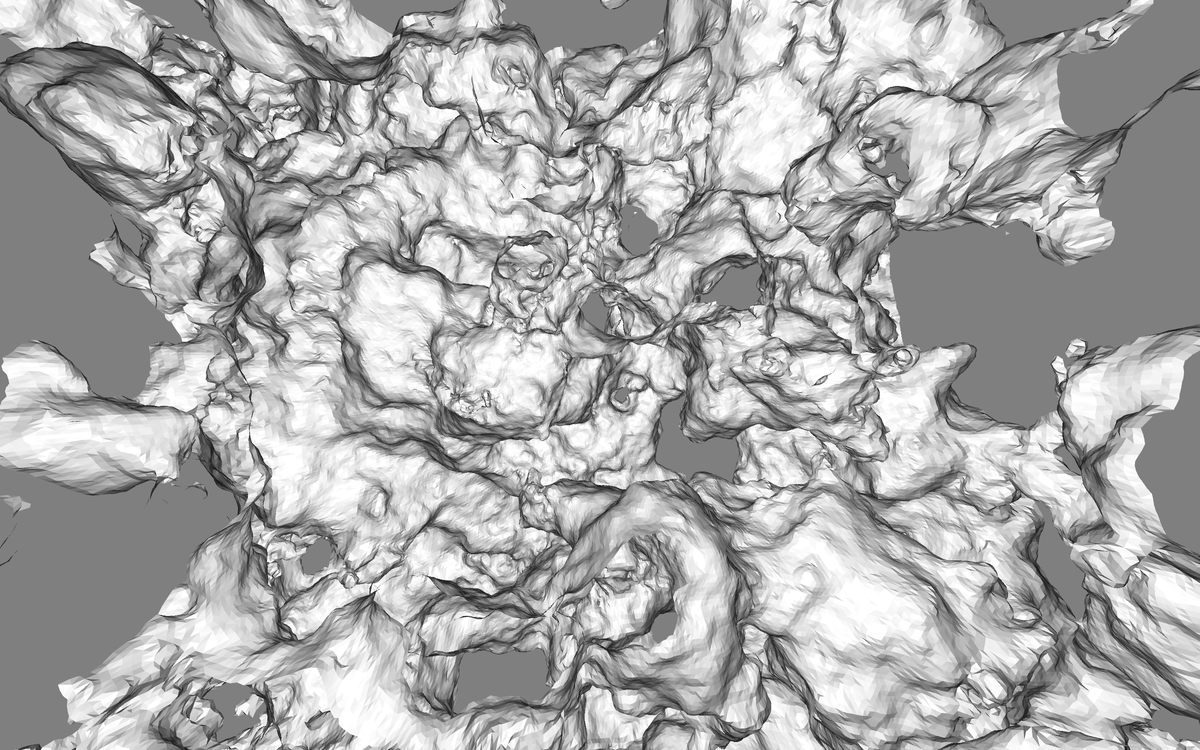

Stretch SDF (2016)

The 3rd of my recent attempts at caching SDF samples in world space, to reduce the number of samples that need to be generated per frame.

We define a non-linear space mapping that assigns a grid to 3D space depending on screen space (perspective). It may be hard to see, but in the above shot the camera would normally be at the center of all geometry, and polygons get bigger further from the center.

The SDF here is just simplex noise, but could be anything.

When the camera moves, we take the existing grid of point data, and for each point undo the space mapping, and then redo it for the new view, possibly moving the point to a new grid cell. If two points end up in the same cell, keep the closest.

Then, compute the SDF for all the new empty cells, and recompute the mesh using marching cubes or similar. In early testing, for small camera movements, fewer than 1% of cells needed to be recomputed.
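A rough Python sketch of the reproject-then-fill loop, assuming a specific mapping the post doesn’t spell out: cells are indexed by azimuth and elevation as seen from the camera plus the logarithm of the distance, so cells grow with distance. `to_cell`, `from_cell`, `ANG_RES` and `LOG_RES` are hypothetical. Each sample keeps its world-space position, so undoing the mapping is trivial, and the marching-cubes step is left out.

```python
import math

ANG_RES = 64  # hypothetical: cells per radian of azimuth/elevation
LOG_RES = 16  # hypothetical: cells per unit of ln(distance)


def to_cell(p, cam):
    """Map a world point to an (azimuth, elevation, log-distance) cell around cam."""
    dx, dy, dz = p[0] - cam[0], p[1] - cam[1], p[2] - cam[2]
    r = max(math.sqrt(dx * dx + dy * dy + dz * dz), 1e-9)
    az = math.atan2(dy, dx)
    el = math.asin(max(-1.0, min(1.0, dz / r)))
    cell = (math.floor(az * ANG_RES), math.floor(el * ANG_RES), math.floor(math.log(r) * LOG_RES))
    return cell, r


def from_cell(cell, cam):
    """Inverse mapping: the world-space center of a cell."""
    az = (cell[0] + 0.5) / ANG_RES
    el = (cell[1] + 0.5) / ANG_RES
    r = math.exp((cell[2] + 0.5) / LOG_RES)
    return (cam[0] + r * math.cos(el) * math.cos(az),
            cam[1] + r * math.cos(el) * math.sin(az),
            cam[2] + r * math.sin(el))


def reproject(grid, new_cam):
    """Move every stored sample into its cell for the new camera; on collisions keep the closest."""
    best = {}
    for point, value in grid.values():
        cell, r = to_cell(point, new_cam)
        if cell not in best or r < best[cell][0]:
            best[cell] = (r, point, value)
    return {cell: (point, value) for cell, (r, point, value) in best.items()}


def fill_missing(grid, wanted, cam, sdf):
    """Evaluate the SDF only for wanted cells left empty; return how many were computed."""
    computed = 0
    for cell in wanted:
        if cell not in grid:
            p = from_cell(cell, cam)
            grid[cell] = (p, sdf(p))
            computed += 1
    return computed
```

Usage: fill once, nudge the camera, reproject, and count how many cells still need a fresh SDF evaluation.

```python
def sphere(p):
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - 5.0

wanted = [(i, j, k) for i in range(-3, 3) for j in range(-3, 3) for k in range(20, 30)]
grid = {}
print(fill_missing(grid, wanted, (0.0, 0.0, 0.0), sphere))  # 360: everything is new
grid = reproject(grid, (0.001, 0.0, 0.0))
print(fill_missing(grid, wanted, (0.001, 0.0, 0.0), sphere))  # only cells left empty
```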

The cool thing is that it completely decouples display and camera from SDF generation. You have a mesh that is fast to render, and camera movements are fine: at worst, they reduce the detail of the view the further the camera moves from where the mesh was last recomputed. Meanwhile, other threads can recompute SDF data as needed, swapping in a new mesh once a second or so instead of once a frame.

Seems promising, except that for high detail scenes you’d probably need a pretty crazy grid size.

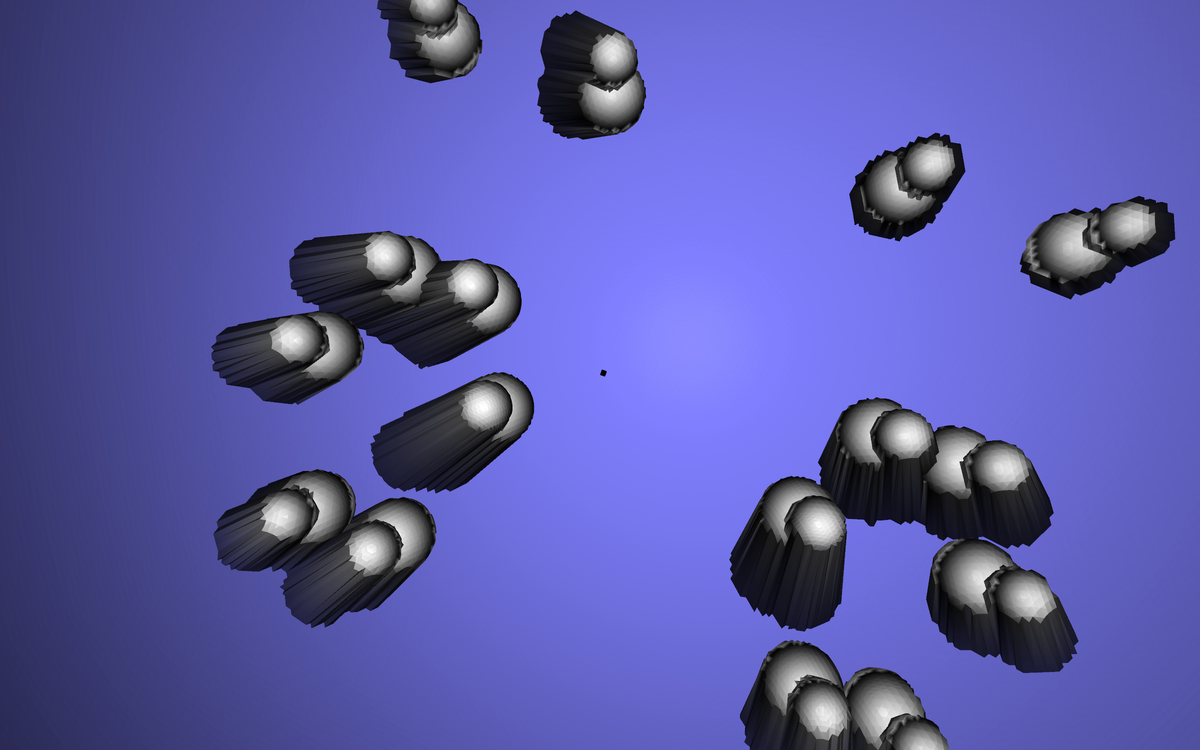

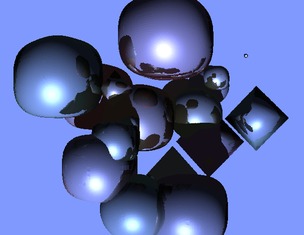

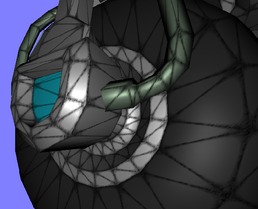

Camera space meshes (2016)

2nd attempt at caching SDF data. Here it is cached in camera space: results of ray-marches/traces are stored in a spherical mesh around the camera, with verts pulled in/out from their unit-sphere positions to reflect their distance from the camera.

Then, when the camera moves, an algorithm culls far points on back-facing edges (these have now become invisible due to parallax from the camera motion) and subdivides edges that have grown beyond a certain screen-space length threshold (these are parts of the scene that are now more visible than before). It then computes new rays for the new edges.
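A small Python sketch of just the subdivision test, under assumptions of my own: the angle an edge subtends at the camera stands in for its screen-space length, and each split edge yields one new ray through its midpoint. `angular_length` and `edges_to_split` are hypothetical names, and the back-face culling pass is not shown.

```python
import math


def _sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def _norm(v):
    return math.sqrt(sum(c * c for c in v))


def angular_length(a, b, cam):
    """Angle (radians) that the edge a-b subtends as seen from cam."""
    va, vb = _sub(a, cam), _sub(b, cam)
    cos_angle = sum(x * y for x, y in zip(va, vb)) / (_norm(va) * _norm(vb))
    return math.acos(max(-1.0, min(1.0, cos_angle)))


def edges_to_split(verts, edges, cam, max_angle):
    """Return (edge, ray direction) pairs for edges that have grown past max_angle."""
    result = []
    for i, j in edges:
        if angular_length(verts[i], verts[j], cam) > max_angle:
            mid = [(verts[i][k] + verts[j][k]) / 2 for k in range(3)]
            d = _sub(mid, cam)
            n = _norm(d)
            result.append(((i, j), [c / n for c in d]))  # normalized direction of the new ray
    return result


verts = [(1.0, 0.0, 5.0), (-1.0, 0.0, 5.0)]
print(edges_to_split(verts, [(0, 1)], (0.0, 0.0, 0.0), 0.5))  # []
print(edges_to_split(verts, [(0, 1)], (0.0, 0.0, 4.0), 0.5))  # [((0, 1), [0.0, 0.0, 1.0])]
```

Moving the camera toward the edge makes it subtend a larger angle (about 0.39 rad from the origin, about 1.57 rad from z = 4), which is exactly when new detail becomes visible and a new ray is needed.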

So far this algorithm is pretty slow and buggy, so I’m not sure it is worth anything :)

What you see above is after the camera has moved some, without recomputing edges.

Camera space point cubemaps (2015)

1st attempt at caching ray data, and one of the simplest: store world-space points in a cubemap around the camera, then re-project them into a new cubemap when the camera moves. It’s all done in a compute shader, so it tries to deal with overlapping points in a cubemap cell using atomics. Empty cells are re-traced afterwards.
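A CPU-side Python sketch of the reprojection step, assuming a simplified face layout (not the exact OpenGL cubemap orientation) and a dictionary standing in for the compute shader’s atomics. `cube_cell`, `reproject_points` and `empty_cells` are hypothetical names.

```python
def cube_cell(d, res):
    """Map a non-zero direction to (face, i, j) in a res x res cubemap (simplified face layout)."""
    x, y, z = d
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        face, u, v, m = (0 if x > 0 else 1), y, z, ax
    elif ay >= az:
        face, u, v, m = (2 if y > 0 else 3), x, z, ay
    else:
        face, u, v, m = (4 if z > 0 else 5), x, y, az
    # u/m and v/m lie in [-1, 1]; map them to cell indices 0..res-1.
    i = min(res - 1, int((u / m + 1) * 0.5 * res))
    j = min(res - 1, int((v / m + 1) * 0.5 * res))
    return face, i, j


def reproject_points(points, cam, res):
    """Re-bin world-space points around a new camera, keeping the closest point per cell."""
    cells = {}
    for p in points:
        d = (p[0] - cam[0], p[1] - cam[1], p[2] - cam[2])
        dist2 = d[0] ** 2 + d[1] ** 2 + d[2] ** 2
        if dist2 == 0:
            continue
        key = cube_cell(d, res)
        # On the GPU this would be an atomic min on packed depth; a dict stands in for it here.
        if key not in cells or dist2 < cells[key][0]:
            cells[key] = (dist2, p)
    return {key: p for key, (_, p) in cells.items()}


def empty_cells(cells, res):
    """Cells that received no point and need to be re-traced."""
    return [(f, i, j) for f in range(6) for i in range(res) for j in range(res)
            if (f, i, j) not in cells]


pts = [(5, 0, 0), (10, 0, 0)]
print(reproject_points(pts, (0, 0, 0), 4))   # {(0, 2, 2): (5, 0, 0)}
print(reproject_points(pts, (20, 0, 0), 4))  # {(1, 2, 2): (10, 0, 0)}
```

The second call shows parallax at work: from the new camera position the other point is now in front, so it wins the cell.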

Promising, except that the points don’t have any geometric connectivity information, so you get weird artefacts when you mix camera zoom (cells open up between existing ones of a surface) and parallax (points of one surface move behind another, but could take up empty cells). This is hard to resolve even with heuristics, which prompted me to try the mesh-based approach above.

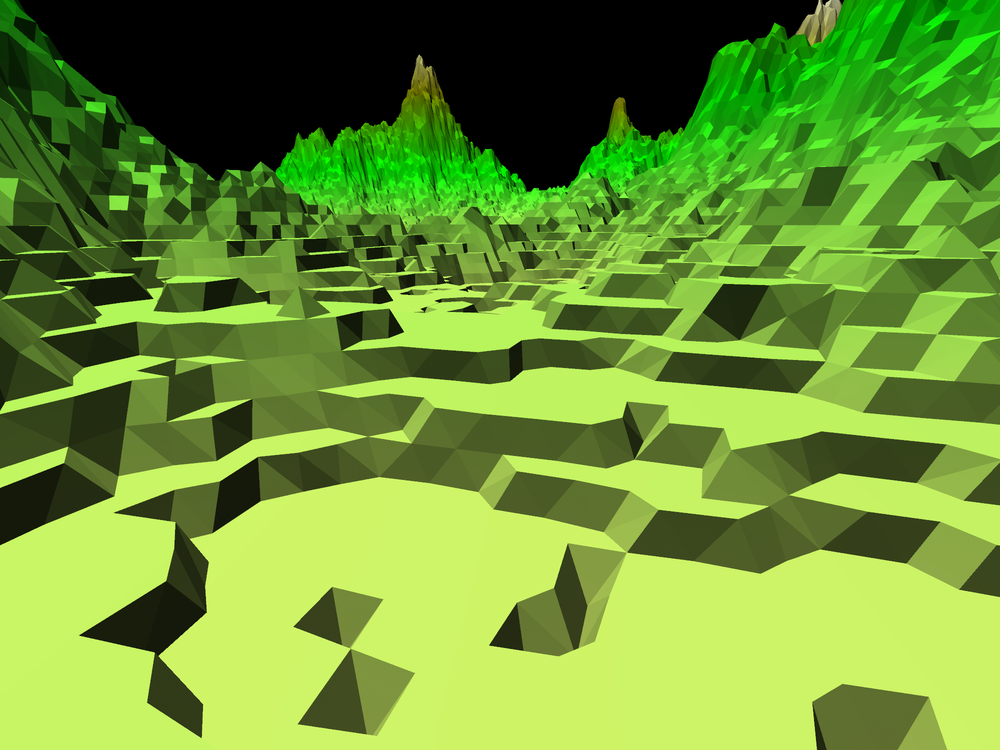

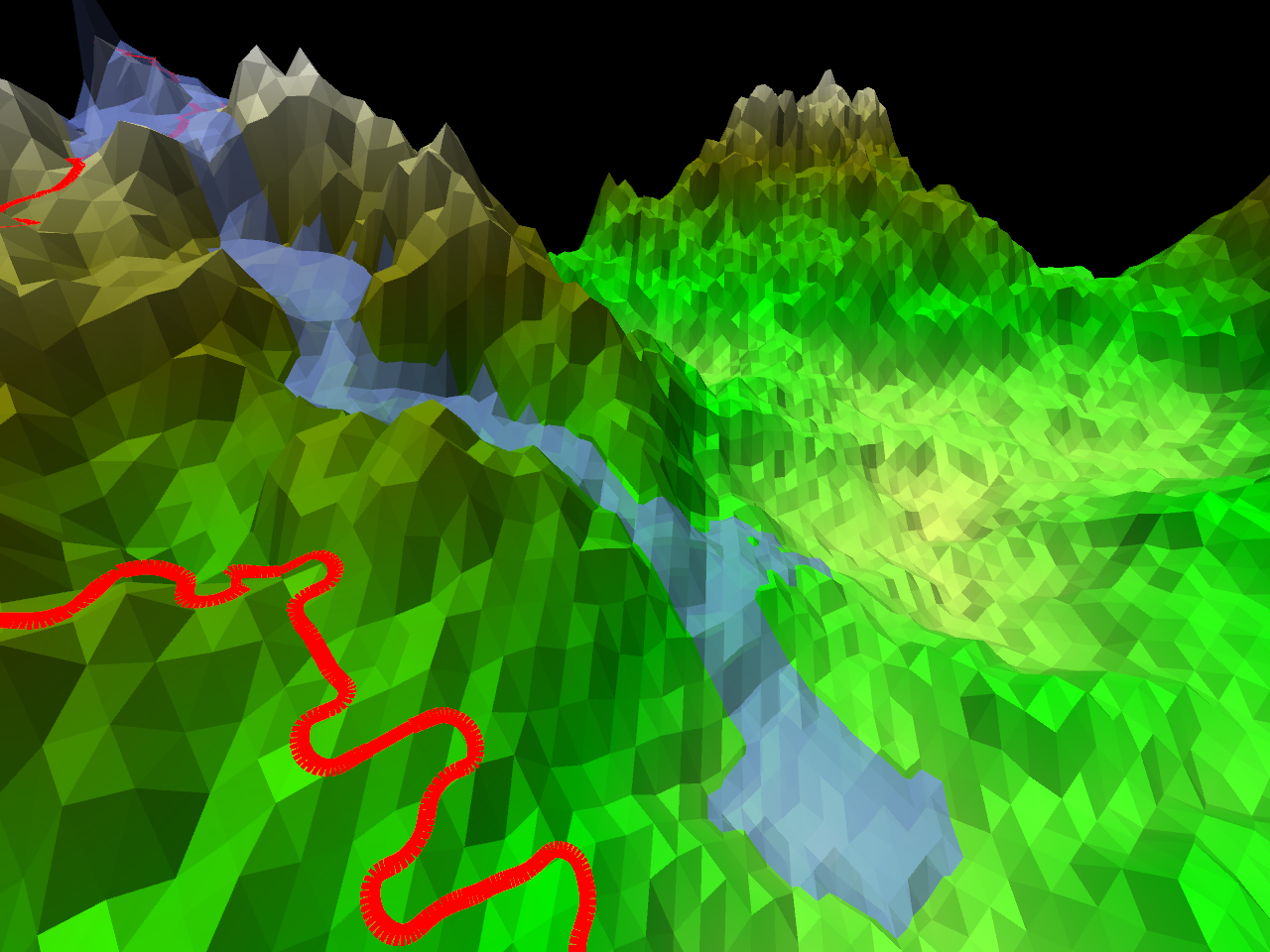

Auto terrace (2015)

Slanted terraces as a visually friendlier alternative to cubic voxels.
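How the terraces are generated isn’t described. One common way to get slanted terraces from a smooth heightmap, shown here as an assumption in Python, is to quantize height into steps but replace the vertical part of each step with a linear ramp over a fraction `ramp` of the step height. `terrace` and its parameters are hypothetical.

```python
import math


def terrace(h, step=1.0, ramp=0.25):
    """Flat plateaus joined by slanted ramps; continuous and monotonic in h."""
    t = h / step
    base = math.floor(t)
    frac = t - base
    # Flat for the first (1 - ramp) of each step, then a linear slope up to the next level.
    slope = min(max((frac - (1.0 - ramp)) / ramp, 0.0), 1.0)
    return (base + slope) * step


heightmap = [[0.1, 0.5, 0.8], [0.9, 1.2, 1.95]]
print([[terrace(h) for h in row] for row in heightmap])
```

Because the ramp ends exactly at the next plateau, neighbouring cells never produce the vertical cliffs that plain rounding (cubic voxels) would.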

Extra DOF (2015)

Most cameras don’t give you nice depth of field (small sensors or lack of a low f-stop), so my idea here was to take an image with a small amount of DOF, measure the relative sharpness of each pixel, then increase the blurriness in areas with low sharpness for an exaggerated DOF effect. Works to some extent, but not without a lot of artifacts.
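A simplified Python sketch of the idea on a grayscale image stored as a list of rows. The sharpness measure (local average of the absolute Laplacian) and the box blur are assumptions of mine rather than what the experiment used; `window` and `max_radius` are hypothetical parameters.

```python
def laplacian_abs(img):
    """Absolute 4-neighbour Laplacian; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = abs(4 * img[y][x] - img[y - 1][x] - img[y + 1][x]
                            - img[y][x - 1] - img[y][x + 1])
    return out


def box_mean(img, x, y, r):
    """Mean over the (2r+1)^2 window around (x, y), clipped to the image."""
    h, w = len(img), len(img[0])
    total, n = 0.0, 0
    for yy in range(max(0, y - r), min(h, y + r + 1)):
        for xx in range(max(0, x - r), min(w, x + r + 1)):
            total += img[yy][xx]
            n += 1
    return total / n


def blur_radii(img, window=2, max_radius=3):
    """Per-pixel blur radius: 0 at the sharpest pixels, max_radius where there is no detail."""
    lap = laplacian_abs(img)
    h, w = len(img), len(img[0])
    sharp = [[box_mean(lap, x, y, window) for x in range(w)] for y in range(h)]
    peak = max(max(row) for row in sharp) or 1.0
    return [[round(max_radius * (1.0 - s / peak)) for s in row] for row in sharp]


def extra_dof(img, window=2, max_radius=3):
    """Blur each pixel with a box filter whose radius grows as local sharpness drops."""
    radii = blur_radii(img, window, max_radius)
    h, w = len(img), len(img[0])
    return [[box_mean(img, x, y, radii[y][x]) for x in range(w)] for y in range(h)]
```

Feeding it an image whose left half is a fine checkerboard and whose right half is flat gives small radii on the left and the full `max_radius` on the right. The hard switch between radii at such boundaries is one obvious source of the artifacts mentioned above.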

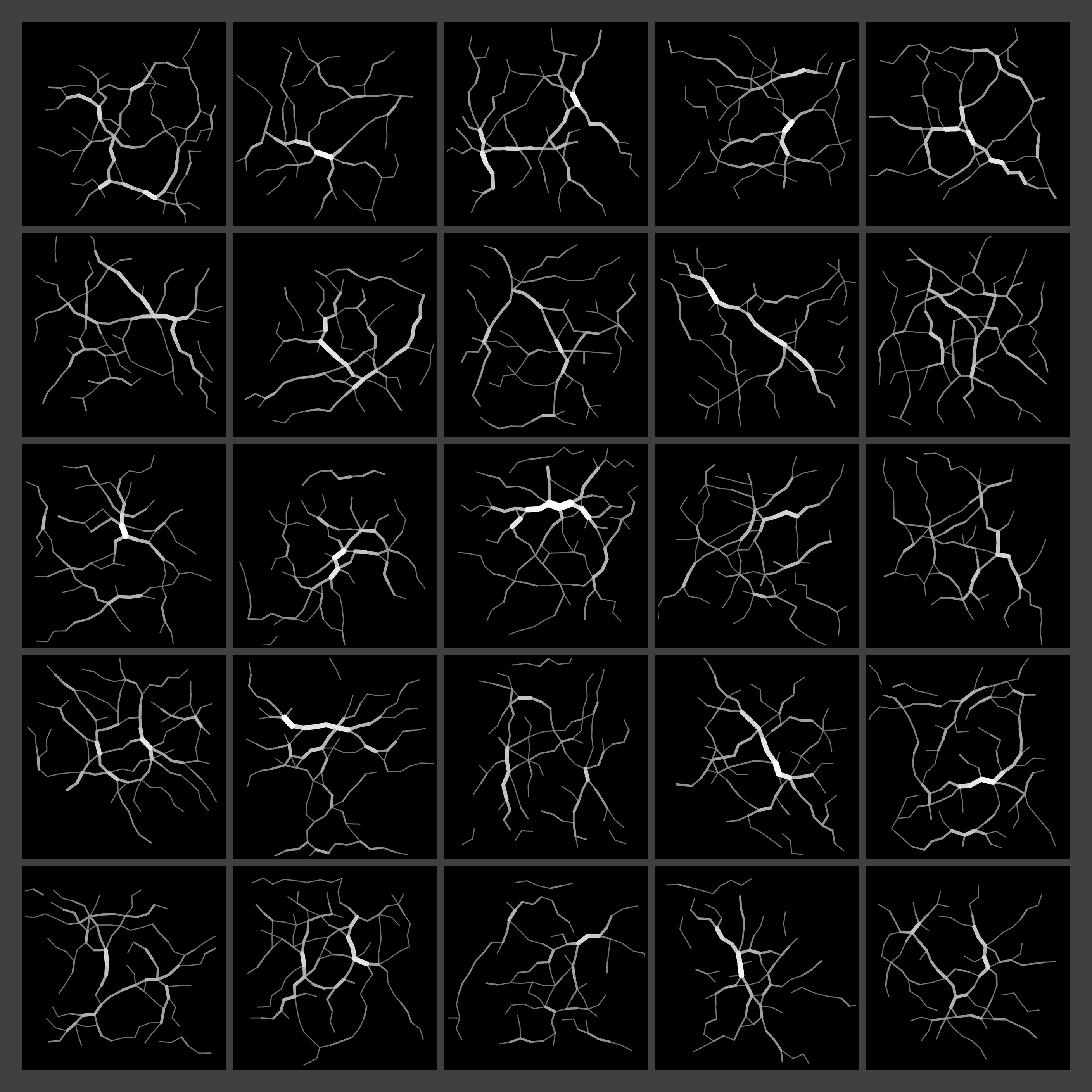

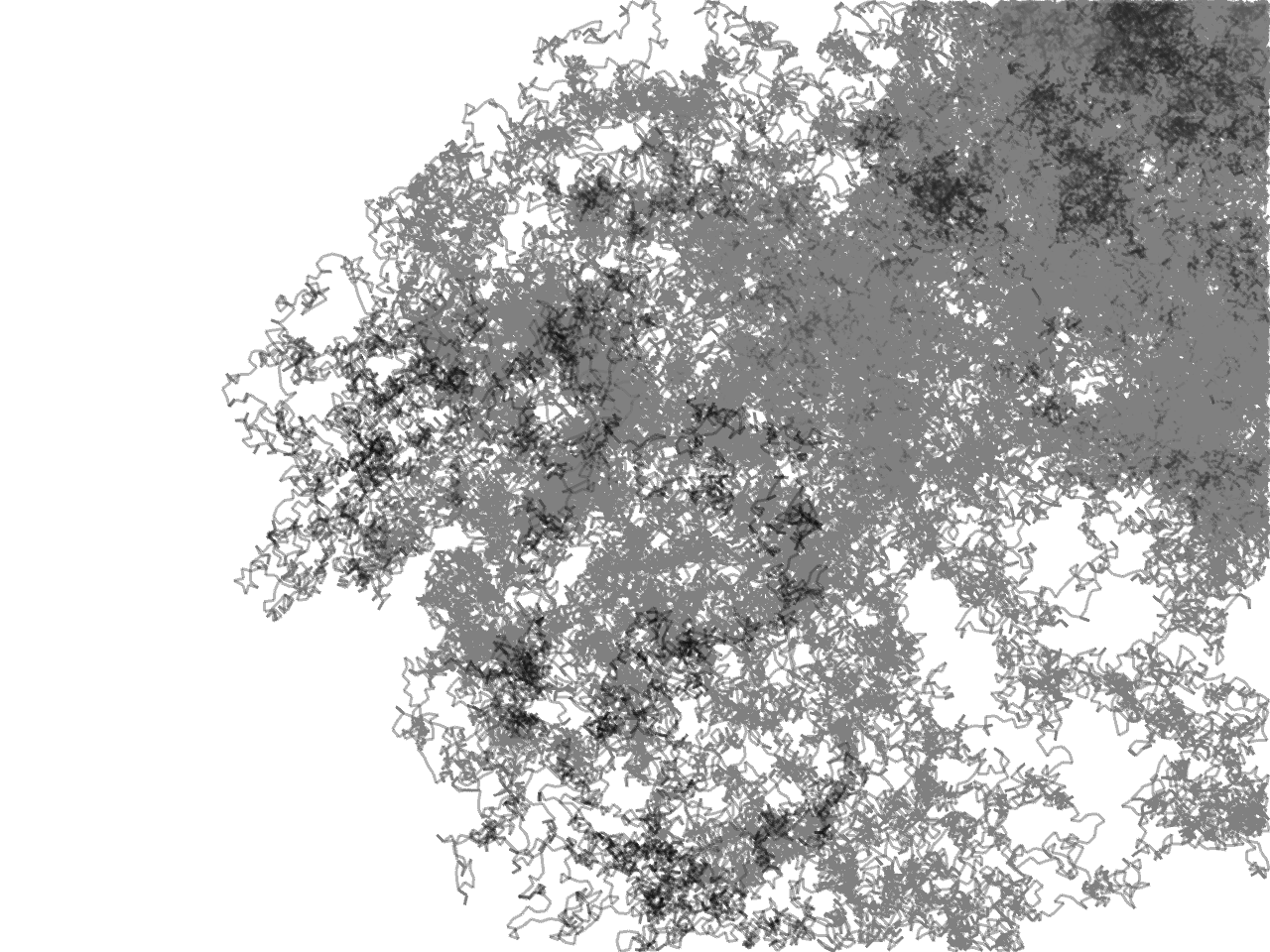

Rnd grid pathfinding (2015)

An algorithm to make a pleasant random grid out of points (for more organic game worlds), which I later enhanced with some random pathfinding (preferring paths that have already been followed) for automatic road networks.
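A Python sketch of the “prefer followed paths” part only. It uses Dijkstra’s algorithm on a regular 4-connected grid as a stand-in for the random point grid (an assumption). Edges that earlier paths already used are cheaper, so later paths merge into existing roads. `find_path`, `build_roads` and `reuse_cost` are hypothetical.

```python
import heapq
import random


def find_path(w, h, start, goal, used, reuse_cost=0.3):
    """Dijkstra on a w x h grid; edges in `used` cost reuse_cost instead of 1."""
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == goal:
            break
        if d > dist[node]:
            continue  # stale heap entry
        x, y = node
        for nb in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if 0 <= nb[0] < w and 0 <= nb[1] < h:
                cost = reuse_cost if frozenset((node, nb)) in used else 1.0
                if d + cost < dist.get(nb, float("inf")):
                    dist[nb] = d + cost
                    prev[nb] = node
                    heapq.heappush(heap, (d + cost, nb))
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


def build_roads(w, h, trips, reuse_cost=0.3):
    """Route each trip in turn, marking its edges as road for the trips after it."""
    used = set()
    for a, b in trips:
        p = find_path(w, h, a, b, used, reuse_cost)
        used.update(frozenset(e) for e in zip(p, p[1:]))
    return used


rng = random.Random(42)
trips = [((rng.randrange(20), rng.randrange(20)), (rng.randrange(20), rng.randrange(20)))
         for _ in range(10)]
print(len(build_roads(20, 20, trips)), "road segments")
```

With `reuse_cost` below 1, a detour along an existing road can beat a fresh straight path, which is what turns independent trips into a shared network.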

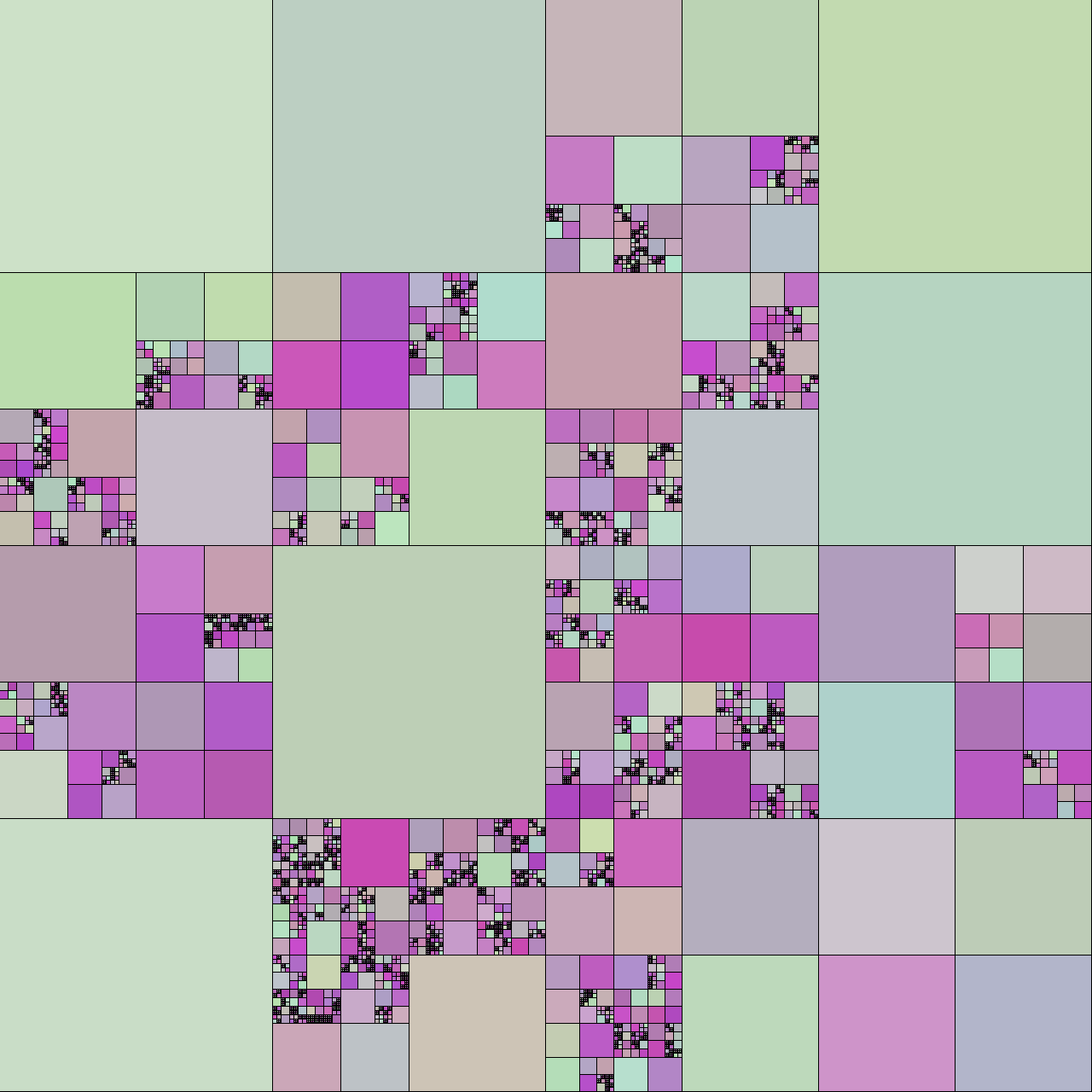

Quad tree abstraction (2014)

For each quad-tree node, measure error from the average color. If below a certain threshold, turn the whole node that color.
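A minimal Python sketch for a square, power-of-two grayscale image (a list of rows). Using the maximum absolute deviation as the “error” is an assumption, since the metric isn’t named; a color version would compare per channel. `abstract` is a hypothetical name.

```python
def abstract(img, threshold):
    """Quad-tree abstraction: flat-fill any node whose pixels stay within threshold of their mean."""
    size = len(img)
    out = [[0.0] * size for _ in range(size)]

    def visit(x, y, s):
        pixels = [img[yy][xx] for yy in range(y, y + s) for xx in range(x, x + s)]
        avg = sum(pixels) / len(pixels)
        err = max(abs(p - avg) for p in pixels)
        if err <= threshold or s == 1:
            for yy in range(y, y + s):
                for xx in range(x, x + s):
                    out[yy][xx] = avg
        else:
            half = s // 2
            for dy in (0, half):
                for dx in (0, half):
                    visit(x + dx, y + dy, half)

    visit(0, 0, size)
    return out


img = [[1, 1, 5, 5], [1, 1, 5, 5], [0, 0, 9, 1], [0, 0, 3, 3]]
print(abstract(img, 5))  # [[1.0, 1.0, 5.0, 5.0], [1.0, 1.0, 5.0, 5.0], [0.0, 0.0, 4.0, 4.0], [0.0, 0.0, 4.0, 4.0]]
```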

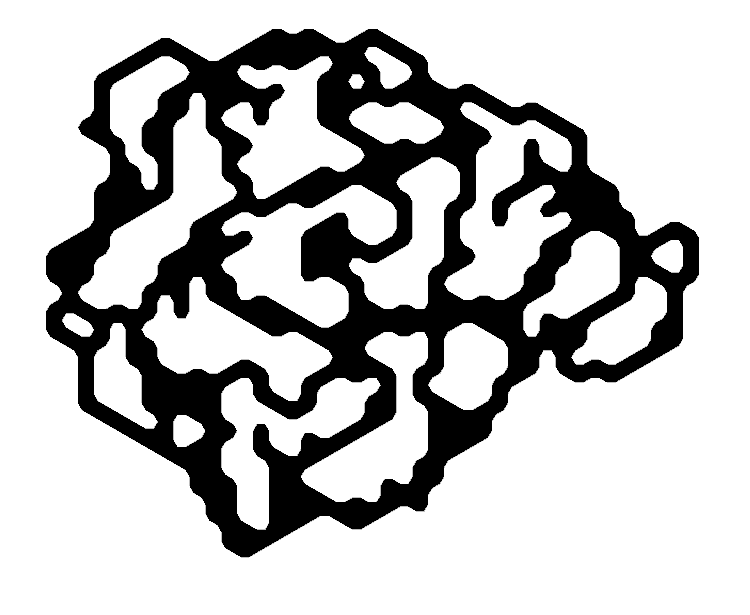

Smooth hex rendering (2014)

A simple way to turn hex grids into more organic, smooth-looking shapes, for use in procedural level design or hex sprites.
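The smoothing method isn’t named. As one plausible stand-in, this Python sketch rounds off a hexagon’s corners with Chaikin corner cutting, which replaces each edge with the points 1/4 and 3/4 along it. `hex_corners` and `chaikin` are illustrative names.

```python
import math


def hex_corners(cx, cy, r):
    """Corners of a regular hexagon with circumradius r."""
    return [(cx + r * math.cos(math.pi / 3 * i), cy + r * math.sin(math.pi / 3 * i))
            for i in range(6)]


def chaikin(poly, iterations=2):
    """Chaikin corner cutting on a closed polygon: each edge becomes its 1/4 and 3/4 points."""
    for _ in range(iterations):
        new = []
        for i in range(len(poly)):
            (x0, y0), (x1, y1) = poly[i], poly[(i + 1) % len(poly)]
            new.append((0.75 * x0 + 0.25 * x1, 0.75 * y0 + 0.25 * y1))
            new.append((0.25 * x0 + 0.75 * x1, 0.25 * y0 + 0.75 * y1))
        poly = new
    return poly


blob = chaikin(hex_corners(0.0, 0.0, 1.0), 2)
print(len(blob))  # 24
```

Each iteration doubles the vertex count and converges toward a smooth curve, so two or three passes are usually enough for a sprite outline.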

Water sim (2013)

Water simulation that lets water flow into adjacent grid cells whenever there is a height difference. Intended for some kind of dam-building game that’s still in the back of my mind.
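A minimal Python sketch of that rule on a heightmap, with assumptions of my own. Each cell sends a fraction `rate` of the surface-height difference to every lower 4-neighbour, and outflow is scaled down so a cell never gives away more water than it holds. All transfers are applied at once, so total water is conserved. `step` and `rate` are hypothetical.

```python
def step(terrain, water, rate=0.25):
    """One simulation step; returns the new water depths."""
    h, w = len(terrain), len(terrain[0])
    delta = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            level = terrain[y][x] + water[y][x]
            flows = []
            for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                if 0 <= nx < w and 0 <= ny < h:
                    diff = level - (terrain[ny][nx] + water[ny][nx])
                    if diff > 0:
                        flows.append((nx, ny, diff * rate))
            total = sum(f for _, _, f in flows)
            # Never move more water out of a cell than it currently holds.
            scale = min(1.0, water[y][x] / total) if total > 0 else 0.0
            for nx, ny, f in flows:
                delta[y][x] -= f * scale
                delta[ny][nx] += f * scale
    return [[water[y][x] + delta[y][x] for x in range(w)] for y in range(h)]


terrain = [[0.0, 0.0, 0.0]]
water = [[3.0, 0.0, 0.0]]
for _ in range(200):
    water = step(terrain, water)
print(water)  # close to [[1.0, 1.0, 1.0]]
```

A raised terrain cell between two cells acts as a dam: no water crosses it until the water level on one side exceeds its height.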

Randomwalk (2013)

Nice Pixels (2013)


Nearest-neighbor pixels are a bit harsh (though they give that nice pixel-art look), while any kind of interpolation is smooth but doesn’t look very neat when zoomed in. This shader tries to achieve the best of both worlds.
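A Python sketch of one common way to get this effect, not necessarily what this shader does: remap the fractional texel coordinate so sampling stays flat (nearest-neighbor) inside each texel and blends linearly only in a narrow band around texel boundaries. `sample_nice` and `blend` are hypothetical. In a real pixel shader `blend` would typically come from screen-space derivatives (e.g. GLSL’s `fwidth`), so the band stays about one screen pixel wide at any zoom.

```python
import math


def sample_nice(tex, t, blend):
    """Sample a 1D texture at texel coordinate t (texel i covers [i, i+1)).

    Nearest-neighbor inside texels, with a linear blend only within `blend` texels
    of each texel boundary (blend=1 gives ordinary linear filtering).
    """
    s = t - 0.5                 # shift so integer s values land on texel centers
    i = math.floor(s)
    f = s - i                   # 0 at the center of texel i, 1 at the center of texel i+1
    g = min(max((f - 0.5) / blend + 0.5, 0.0), 1.0)
    a = tex[min(max(i, 0), len(tex) - 1)]
    b = tex[min(max(i + 1, 0), len(tex) - 1)]
    return a + (b - a) * g


tex = [0.0, 1.0]
print(sample_nice(tex, 0.7, 0.25))  # 0.0: inside texel 0, so no blending
print(sample_nice(tex, 1.0, 0.25))  # 0.5: exactly on the texel boundary
```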

Quadtree (2012)

Raytracing (2012)

Simple sphere ray-tracing, to see just how much of it you can do in a compute shader. Experimented with a variety of strategies for traversing a scene stack (occupancy is a bitch), and for representing the scene as a texture.
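A CPU-side Python sketch of the core operation only: a ray/sphere intersection plus a brute-force nearest-hit loop. The traversal strategies and texture scene encoding explored in the experiment aren’t shown. `intersect_sphere` and `nearest_hit` are hypothetical names, and `direction` is assumed to be normalized.

```python
import math


def intersect_sphere(origin, direction, center, radius):
    """Distance to the nearest hit in front of origin, or None (direction must be unit length)."""
    oc = [origin[i] - center[i] for i in range(3)]
    b = sum(oc[i] * direction[i] for i in range(3))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    s = math.sqrt(disc)
    t = -b - s
    if t < 0:
        t = -b + s  # origin is inside the sphere
    return t if t >= 0 else None


def nearest_hit(origin, direction, spheres):
    """Return (distance, sphere index) of the closest hit, or None."""
    best = None
    for idx, (center, radius) in enumerate(spheres):
        t = intersect_sphere(origin, direction, center, radius)
        if t is not None and (best is None or t < best[0]):
            best = (t, idx)
    return best


scene = [((0, 0, 10), 1.0), ((0, 0, 5), 1.0), ((3, 0, 5), 1.0)]
print(nearest_hit((0, 0, 0), (0, 0, 1), scene))  # (4.0, 1)
```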

Meshgen (2011)

This is the built-in SDF-to-mesh converter that is part of the Lobster language/engine. It is very simple but effective: it adds SDFs one by one to a distance grid. Here’s an example of what the code for the above image looks like.

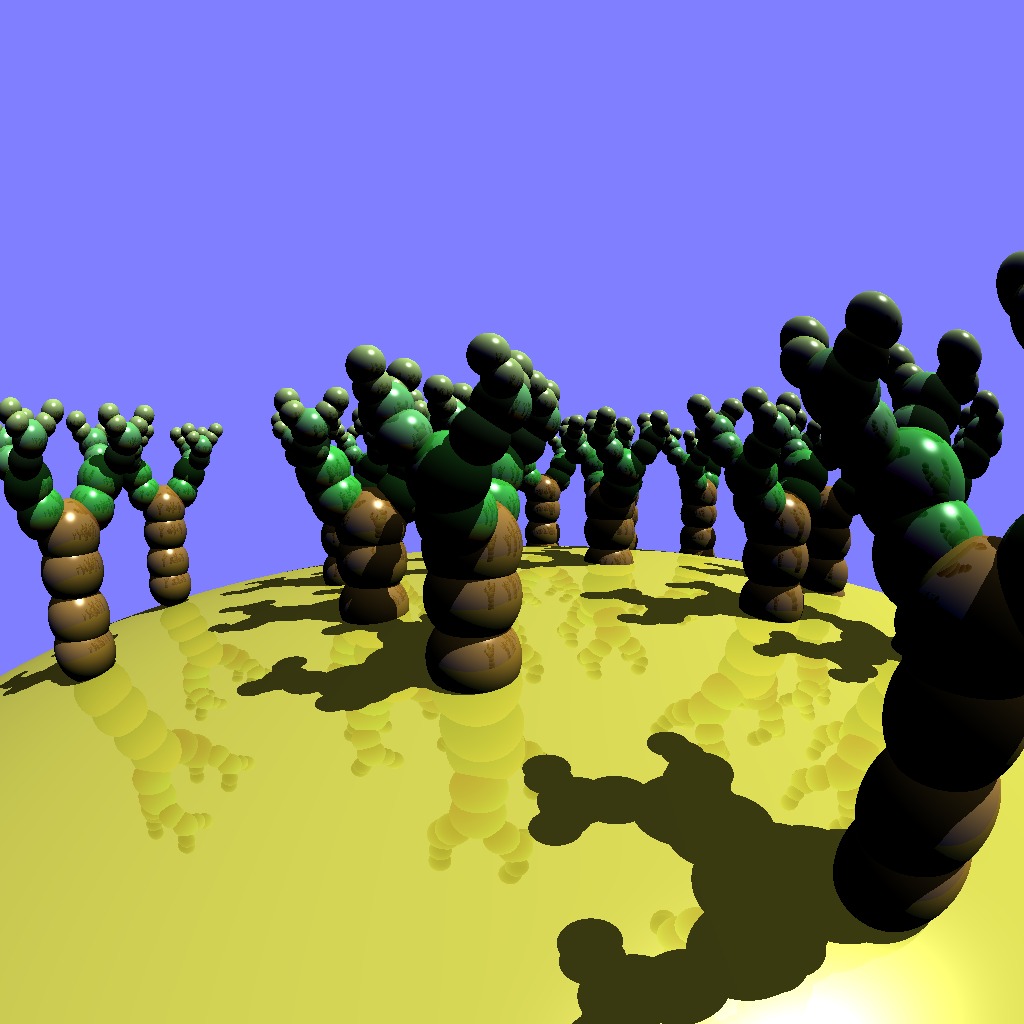
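The Lobster code itself isn’t reproduced here, so this is only a Python sketch of the general idea under my own assumptions: the grid starts at +infinity, and each added SDF is merged with `min` (a union). `make_grid`, `add_sdf` and `sphere` are hypothetical helpers, not the Lobster API, and extracting the mesh from the grid is a separate step not shown.

```python
import math


def make_grid(n, lo, hi):
    """n^3 sample positions over [lo, hi]^3, with every distance starting at +inf."""
    step = (hi - lo) / (n - 1)
    coords = {(i, j, k): (lo + i * step, lo + j * step, lo + k * step)
              for i in range(n) for j in range(n) for k in range(n)}
    dist = {key: math.inf for key in coords}
    return coords, dist


def add_sdf(coords, dist, sdf):
    """Union a new shape into the grid: keep the smaller distance at each sample."""
    for key, p in coords.items():
        dist[key] = min(dist[key], sdf(p))


def sphere(center, radius):
    return lambda p: math.dist(p, center) - radius


coords, dist = make_grid(5, -2.0, 2.0)
add_sdf(coords, dist, sphere((0.0, 0.0, 0.0), 1.0))
add_sdf(coords, dist, sphere((2.0, 0.0, 0.0), 1.0))
print(sum(d < 0 for d in dist.values()), "samples inside")
```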

Octrace (2008)

An experiment in rendering random implicit functions into an octree for use in fast raytracing. Added the usual effects (lighting, reflection, shadows, ambient occlusion).
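A Python sketch of one way to build such an octree, only subdividing cubes the surface might pass through. The rejection test assumes the function behaves like a distance bound (its value never changes faster than distance, as with a true SDF); arbitrary random implicit functions may need a more conservative test. `build_octree` and `leaves` are hypothetical, and the ray traversal and effects are not shown.

```python
import math


def build_octree(f, center, half, depth):
    """None where no surface can be; ('leaf', center, half) at max depth; else ('node', children)."""
    # Conservative rejection: the cube's farthest corner is half * sqrt(3) from its center.
    if abs(f(center)) > half * math.sqrt(3):
        return None
    if depth == 0:
        return ('leaf', center, half)
    q = half / 2
    children = []
    for dx in (-q, q):
        for dy in (-q, q):
            for dz in (-q, q):
                child = build_octree(f, (center[0] + dx, center[1] + dy, center[2] + dz),
                                     q, depth - 1)
                if child is not None:
                    children.append(child)
    return ('node', children) if children else None


def leaves(node):
    """Flatten the tree into its leaf cubes."""
    if node is None:
        return []
    if node[0] == 'leaf':
        return [node]
    return [leaf for child in node[1] for leaf in leaves(child)]


def unit_sphere(p):
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - 1.0


tree = build_octree(unit_sphere, (0.0, 0.0, 0.0), 2.0, 3)
print(len(leaves(tree)), "leaf cubes near the surface")
```

Empty space is pruned near the root, so a ray tracer can skip large empty regions in a few steps instead of marching through them.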

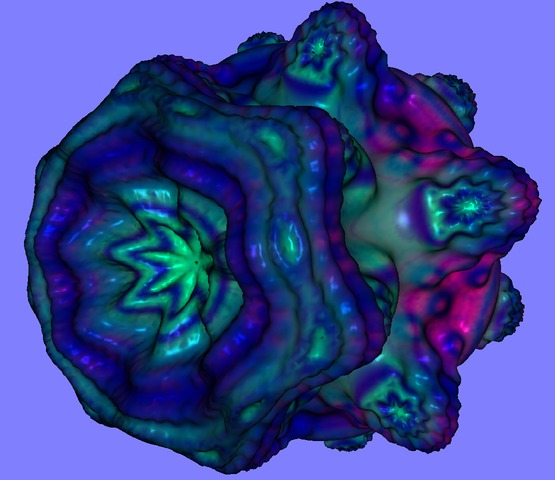

Implicit Functions on the GPU (2008)

Implicit functions are a lot of fun to create geometry with, but a bit computationally intensive for larger volumes, so we do it on the GPU. Marching Cubes then makes it into a mesh that can be rendered traditionally (very fast).
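A CPU-side Python sketch of the first stage of marching cubes only: sample the function on a grid and compute each cube’s 8-bit case index from its corner signs. The edge and triangle lookup tables that turn those indices into triangles are omitted. The corner order below is an assumption and must match whatever tables are used; `sample`, `cube_indices` and `surface_cubes` are hypothetical names.

```python
# Corner order (an assumption): must match the edge/triangle tables it is paired with.
CORNERS = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
           (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]


def sample(f, n, lo, step):
    """Evaluate f at an n^3 lattice starting at lo with the given spacing."""
    return {(i, j, k): f((lo + i * step, lo + j * step, lo + k * step))
            for i in range(n) for j in range(n) for k in range(n)}


def cube_indices(values, n, iso=0.0):
    """8-bit case index per cube: bit b is set when corner b is inside (value < iso)."""
    out = {}
    for i in range(n - 1):
        for j in range(n - 1):
            for k in range(n - 1):
                idx = 0
                for bit, (di, dj, dk) in enumerate(CORNERS):
                    if values[(i + di, j + dj, k + dk)] < iso:
                        idx |= 1 << bit
                out[(i, j, k)] = idx
    return out


def surface_cubes(indices):
    """Cubes that are neither fully outside (0) nor fully inside (255)."""
    return [cell for cell, idx in indices.items() if idx not in (0, 255)]


vals = sample(lambda p: p[2] - 0.5, 3, 0.0, 1.0)  # plane z = 0.5
print(len(surface_cubes(cube_indices(vals, 3))))  # 4
```

Each cube is classified independently, which is why this stage maps so naturally onto one GPU thread per cube.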

Geometry Shaders (2007)

Some fun geometry shaders: fisheye projection (with hardcoded subdivision, since no tessellator was available), and triangle-edge-based sketching.
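A Python sketch of one common fisheye model (equidistant: image radius proportional to the angle from the view axis). The model is an assumption, since the one used isn’t stated. It also shows why subdivision is needed: the mapping is non-linear, so a straight triangle edge should project to a curve, but the rasterizer only connects projected vertices with straight lines. `fisheye_project` and `fov` are hypothetical names.

```python
import math


def fisheye_project(p, fov):
    """Equidistant fisheye for a view-space point (camera looks down -z); edge of fov maps to radius 1."""
    x, y, z = p
    rho = math.hypot(x, y)
    if rho == 0:
        return (0.0, 0.0)
    theta = math.atan2(rho, -z)  # angle from the view axis
    r = theta / (fov / 2)
    return (x / rho * r, y / rho * r)


a, b = (1.0, 0.0, -1.0), (1.0, 0.0, -0.1)
mid = tuple((a[i] + b[i]) / 2 for i in range(3))
pa, pb = fisheye_project(a, math.pi), fisheye_project(b, math.pi)
print((pa[0] + pb[0]) / 2, fisheye_project(mid, math.pi)[0])  # straight-line midpoint vs true midpoint
```

The two printed values differ (roughly 0.72 vs 0.68), so without extra vertices along each edge the projected geometry would be visibly wrong.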

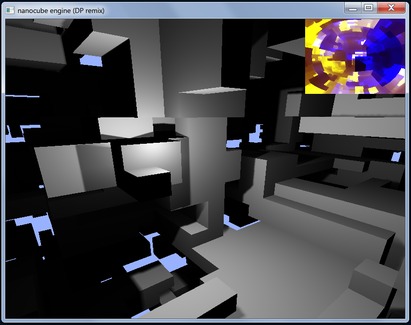

NanoCube (2005)

I started the cube engine(s) with lofty goals of utter simplicity and cubic worlds, but I failed on both counts. NanoCube was a project purely for the sake of trying to write a full FPS game in the fewest lines of code possible, making everything (including animated characters) out of cubes. This failed too, when Lee added his dual parabolic shadowmaps.

Old

Some very old engine prototypes.